Creating Ghost Services with Single Packet Authorization

29 Nov, 2009

Most uses of Single Packet Authorization (SPA) focus on gaining access to a service such as sshd that is behind a default-drop packet filter. The point is that anyone who is scanning for the service cannot even tell that it is listening, let alone target it with an exploit or an attempt to brute force a password. This is fine, but given that firewalls such as iptables offer well-designed NAT capabilities, can a more interesting access model be developed for SPA? How about accessing sshd through a port where another service (such as Apache on port 80) is already bound? Because iptables operates on packets within kernel space, a DNAT rule in the nat table's PREROUTING chain rewrites the destination port before the kernel decides which local socket receives the packet, so there is never any conflict with a user space application such as Apache. This makes it possible to create "ghost" services where a port switches for a short period of time to whatever service is requested within an SPA packet (e.g. sshd), but everyone else always just sees the service that is normally bound there (e.g. Apache on port 80).
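
To make the kernel-space ordering concrete, here is a minimal Python model (not fwknop code) of the decision a DNAT rule in the nat table's PREROUTING chain makes for the first packet of a new TCP connection. The `Grant` type, the `delivered_port` function, and the 30-second window in the usage lines are hypothetical illustrations. Only the first packet is modeled because iptables consults the nat table once per connection, and connection tracking applies the same translation to the rest of that flow.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Grant:
    """Hypothetical record of one SPA-granted redirect."""
    src_ip: str          # client allowed to use the redirect
    external_port: int   # port the client connects to (e.g. 80)
    internal_port: int   # port the connection is rewritten to (e.g. 22)
    expires_at: float    # time after which new connections are no longer redirected


def delivered_port(grants: Iterable[Grant], src_ip: str, dst_port: int, now: float) -> int:
    """Return the local port that the first packet of a new TCP connection reaches.

    This mimics a DNAT rule in the nat table's PREROUTING chain: the rewrite is
    decided before the kernel looks up a listening socket, so the service bound
    to dst_port (e.g. Apache on 80) never sees redirected connections.
    """
    for g in grants:
        if g.src_ip == src_ip and g.external_port == dst_port and now < g.expires_at:
            return g.internal_port
    return dst_port  # no matching grant: the normally bound service answers


# Assumed 30-second window, granted to the spaclient at time 0.
grants = [Grant("1.1.1.1", 80, 22, expires_at=30.0)]
print(delivered_port(grants, "1.1.1.1", 80, now=10.0))  # 22: spaclient reaches sshd
print(delivered_port(grants, "3.3.3.3", 80, now=10.0))  # 80: scanner reaches Apache
print(delivered_port(grants, "1.1.1.1", 80, now=45.0))  # 80: window closed, Apache again
```

Any other source, or the authorized source after the window closes, lands on whatever is normally bound to the port. An ssh session that was already established through the redirect keeps working after the grant expires, provided the firewall accepts ESTABLISHED traffic, because its translation lives in the connection tracking table rather than in the rule.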

To illustrate this concept, let's use fwknop from the spaclient system below to access sshd on the spaserver system, but request that access be granted through port 80. Further, on the spaserver system, let's verify that Apache is running and that, from the perspective of any scanner out on the Internet, it is the only accessible service. That is, sshd and all other services are firewalled off by iptables. We'll assume that the spaclient system has IP 1.1.1.1, the spaserver system has IP 2.2.2.2, and the scanner system has IP 3.3.3.3.

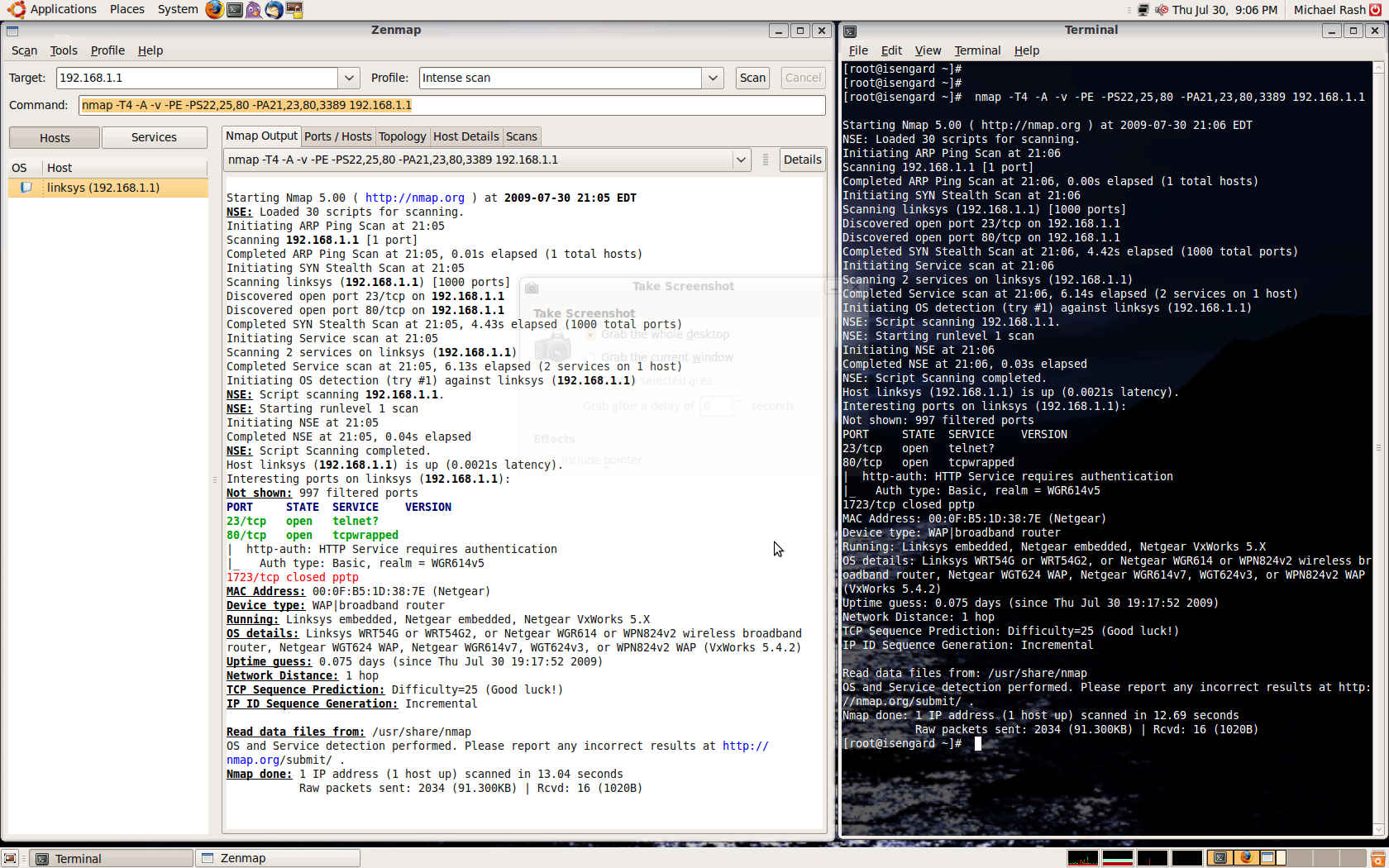

First, let's scan the spaserver system from the scanner system and verify that only port 80 is accessible:

    [scanner]# nmap -P0 -n -sV 2.2.2.2
    Starting Nmap 5.00 ( http://nmap.org ) at 2009-11-29 15:14 EST
    Interesting ports on 2.2.2.2:
    Not shown: 999 filtered ports
    PORT   STATE SERVICE VERSION
    80/tcp open  http    Apache httpd 2.2.8 ((Ubuntu) mod_python/3.3.1 Python/2.5.2)
    Service detection performed. Please report any incorrect results at http://nmap.org/submit/ .
    Nmap done: 1 IP address (1 host up) scanned in 90.94 seconds

Good. Now, from the spaclient system, let's fire up fwknop and request access to sshd on the spaserver system. But instead of just gaining access to port 22, we'll request access to port 22 via port 80; the fwknopd daemon will build the appropriate DNAT iptables rules to make this work:
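
fwknopd's actual rules are not shown in this post, so the following is only a sketch, under stated assumptions, of the kind of rule pair that implements the redirect: a DNAT rule in the nat table that sends 1.1.1.1's traffic for port 80 to local port 22, and a filter rule that accepts the translated connection. The function name `ghost_rules` is hypothetical, and the sketch uses the built-in PREROUTING and INPUT chains rather than whatever chains fwknopd manages itself. It prints the commands instead of running them, since changing iptables requires root.

```python
import shlex


def ghost_rules(client_ip, server_ip, external_port, internal_port, action="-I"):
    """Build (but do not run) iptables commands for a hypothetical ghost service.

    action="-I" inserts the rules at the top of each chain; action="-D" deletes
    the same rules, e.g. when the SPA access window expires.
    """
    if action not in ("-I", "-D"):
        raise ValueError("action must be -I or -D")
    # nat/PREROUTING: rewrite client_ip -> server_ip:external_port into internal_port.
    dnat = ["iptables", "-t", "nat", action, "PREROUTING",
            "-p", "tcp", "-s", client_ip, "-d", server_ip,
            "--dport", str(external_port),
            "-j", "DNAT", "--to-destination", f"{server_ip}:{internal_port}"]
    # filter/INPUT sees the packet after DNAT, so it must match internal_port, not external_port.
    accept = ["iptables", action, "INPUT",
              "-p", "tcp", "-s", client_ip, "-d", server_ip,
              "--dport", str(internal_port), "-j", "ACCEPT"]
    return [shlex.join(dnat), shlex.join(accept)]


for cmd in ghost_rules("1.1.1.1", "2.2.2.2", 80, 22):
    print(cmd)
```

Because both rules match on the source address, only 1.1.1.1 is redirected; a connection to port 80 from any other address never matches the DNAT rule and reaches Apache as usual.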

    [spaclient]$ fwknop -A tcp/22 --NAT-access 2.2.2.2:80 --NAT-local -a 1.1.1.1 -D 2.2.2.2
    [+] Starting fwknop client (SPA mode)...
    [+] Enter an encryption key. This key must match a key in the file
        /etc/fwknop/access.conf on the remote system.
    Encryption Key:
    [+] Building encrypted Single Packet Authorization (SPA) message...
    [+] Packet fields:
        Random data: 3829970026924871
        Username: mbr
        Timestamp: 1259526613
        Version: 1.9.12
        Type: 5 (Local NAT access mode)
        Access: 0.0.0.0,tcp/22
        NAT access: 2.2.2.2,80
        SHA256 digest: Om/GsIVQIRyAp6UWyqjXVqlEQhxz+lVsQhCl1rFBfuI
    [+] Sending 182 byte message to 2.2.2.2 over udp/62201...
    Requesting NAT access to tcp/22 on 2.2.2.2 via port 80
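
The packet fields printed by the client can be illustrated with a sketch that joins them into one string and appends a SHA-256 digest. The field order, the ':' separator, and which fields are base64-encoded are assumptions for illustration rather than fwknop's documented wire format, and the sketch stops before the encryption step the client performs with the shared key. One detail does line up with the transcript: a SHA-256 digest that is base64-encoded without '=' padding is 43 characters long, like the `SHA256 digest` value above.

```python
import base64
import hashlib
import secrets
import time


def b64_nopad(data: bytes) -> str:
    """Base64-encode and strip trailing '=' padding."""
    return base64.b64encode(data).decode("ascii").rstrip("=")


def build_spa_plaintext(user, access, nat_access, msg_type=5, version="1.9.12", now=None):
    """Assemble hypothetical SPA fields and append a SHA-256 digest (no encryption)."""
    random_data = f"{secrets.randbelow(10**16):016d}"  # 16 random digits
    timestamp = int(time.time()) if now is None else int(now)
    fields = [
        random_data,
        b64_nopad(user.encode()),
        str(timestamp),
        version,
        str(msg_type),  # 5 = local NAT access mode, per the client output
        b64_nopad(access.encode()),
        b64_nopad(nat_access.encode()),
    ]
    body = ":".join(fields)  # ':' never appears in base64 output, so it is a safe separator
    digest = b64_nopad(hashlib.sha256(body.encode("ascii")).digest())
    return body + ":" + digest


msg = build_spa_plaintext("mbr", "0.0.0.0,tcp/22", "2.2.2.2,80", now=1259526613)
print(msg)
```

The digest lets the receiver detect a message that was altered or decrypted with the wrong key; in this sketch it is computed over everything before the final ':'.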

With the receipt of the SPA packet, the fwknopd daemon has reconfigured iptables to allow an ssh connection through port 80 from the spaclient IP 1.1.1.1 (note the "-p 80" argument on the ssh command line):

    [spaclient]$ ssh -p 80 -l mbr 2.2.2.2
    mbr@2.2.2.2's password:
    [spaserver]$

If we scan the spaserver system again from the scanner system, we still see only Apache:

    [scanner]# nmap -P0 -n -sV 2.2.2.2
    Starting Nmap 5.00 ( http://nmap.org ) at 2009-11-29 15:29 EST
    Interesting ports on 2.2.2.2:
    Not shown: 999 filtered ports
    PORT   STATE SERVICE VERSION
    80/tcp open  http    Apache httpd 2.2.8 ((Ubuntu) mod_python/3.3.1 Python/2.5.2)
    Service detection performed. Please report any incorrect results at http://nmap.org/submit/ .
    Nmap done: 1 IP address (1 host up) scanned in 89.22 seconds

So, the IP 1.1.1.1 has access to sshd, but all the scanner can ever see is 'Apache httpd 2.2.8'.

A legitimate question at this point is 'why is this useful?'. Well, I have been on networks before where local access controls only allowed outbound DNS, HTTP and HTTPS traffic, so this technique allows ssh connections to be made in a manner that is consistent with these access controls. (This assumes that HTTP connections are not made through a proxy, and that the fwknopd daemon is configured to sniff SPA packets over port 53.) Further, to anyone who is able to sniff traffic, it can be hard to figure out what is really going on in terms of SPA packets and associated follow-on connections. This is especially true when other tricks such as port randomization are also applied.
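
Port randomization can be sketched in a few lines: pick a random destination port for the SPA packet and a different random port for the NAT access, avoiding ports that already have services bound. The 10000-65535 range, the `random_ports` name, and the assumption that the server side watches that whole range are illustrative choices, not fwknop defaults. The `secrets` module is used so the choice is not predictable from Python's default pseudo-random generator.

```python
import secrets


def random_ports(reserved, low=10000, high=65535):
    """Return (spa_port, nat_port): two distinct random ports in [low, high] outside reserved."""
    taken = {p for p in reserved if low <= p <= high}
    if (high - low + 1) - len(taken) < 2:
        raise ValueError("not enough free ports in range")

    def pick(exclude):
        while True:  # rejection sampling; terminates because at least two free ports exist
            port = low + secrets.randbelow(high - low + 1)
            if port not in exclude:
                return port

    spa_port = pick(taken)
    nat_port = pick(taken | {spa_port})
    return spa_port, nat_port


spa_port, nat_port = random_ports(reserved={22, 80, 443})
print(f"send SPA to udp/{spa_port}, then: ssh -p {nat_port} -l mbr 2.2.2.2")
```

With both ports changing on every request, a passive observer sees an SPA packet and a follow-on connection on unrelated, unpredictable ports, which is what makes the pair hard to correlate.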

I demonstrated the service ghosting technique at DojoCon a few weeks ago, and the video of this is available here (towards the end of the video).

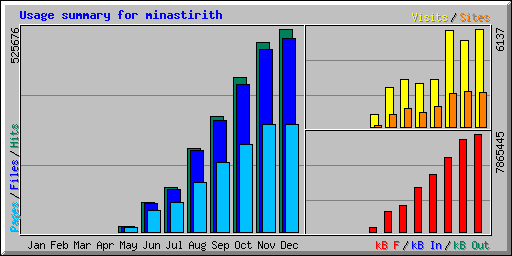

So, the average number of hits starts at 803 in May and climbs rapidly to nearly 17,000 in December. Here are the top five User-Agents and associated hit counts:

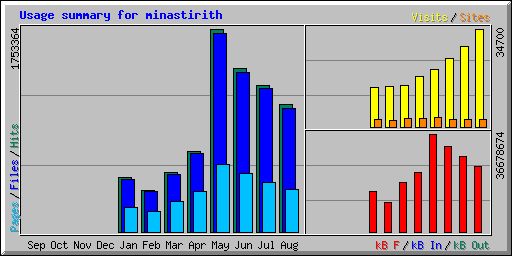

The month of May was certainly an aberration, with over 56,000 hits per day, and August topped out at 42,000 hits per day. In May, the top five crawlers were:

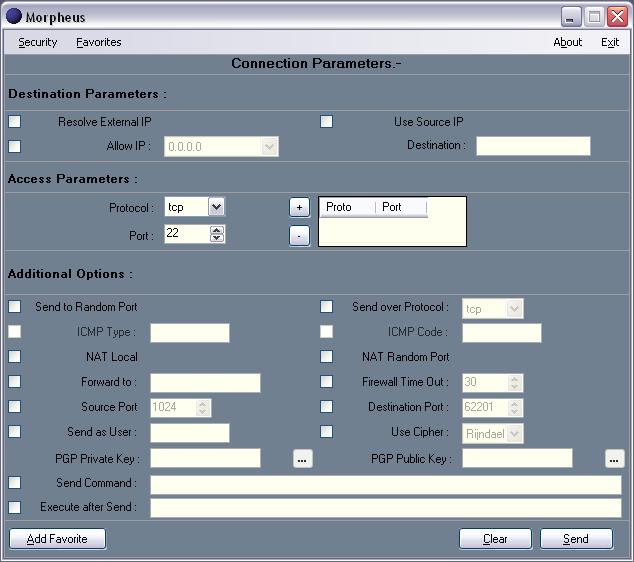

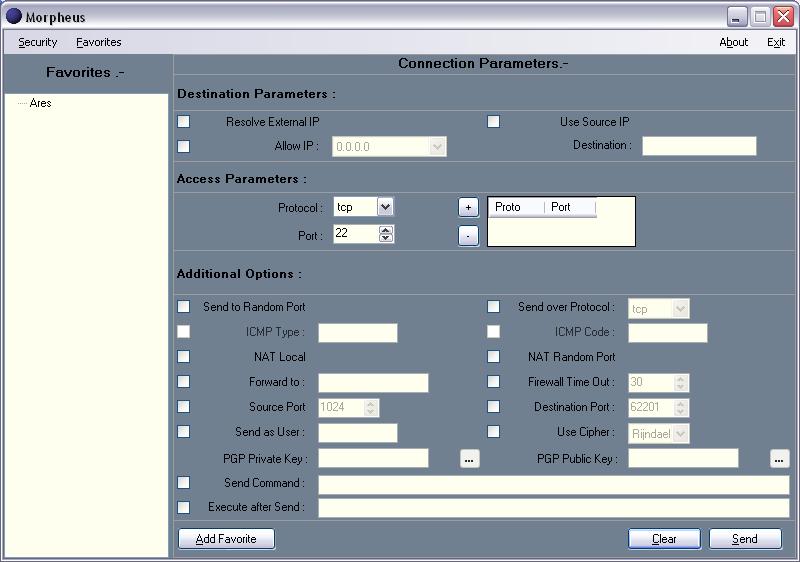

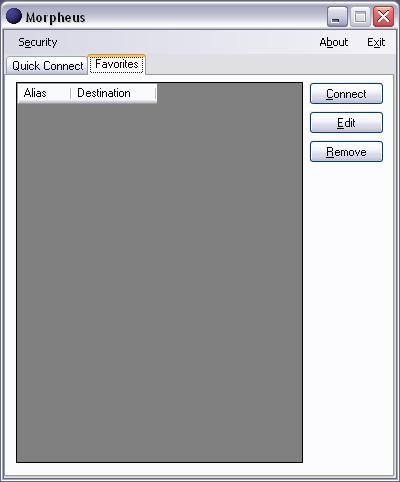

It is excellent to see work going on in the world of user interfaces to fwknop and SPA. Without an effective UI on Windows, a large user base is effectively cut off.