Integrating fwknop with the 'American Fuzzy Lop' Fuzzer

23 November, 2014

Over the past few months, the American Fuzzy Lop (AFL) fuzzer written by Michal Zalewski has become a tour de force in the security field. Many interesting bugs have been found, including a late-breaking bug in the venerable cpio utility that Michal announced to the full-disclosure list. It is clear that AFL is succeeding where other fuzzers have either failed or not been applied, and this is an important development for anyone trying to maintain a secure code base. That is, the ease of use coupled with the powerful fuzzing strategies offered by AFL means that open source projects should integrate it directly into their testing systems.

This has been done for the fwknop project with some basic scripting and one patch to the fwknop code base. The patch was necessary because, according to the AFL documentation, projects that leverage things like encryption and cryptographic signatures are not well suited to brute force fuzzing coverage, and fwknop definitely fits into this category. Crypto being a fuzzing barrier is not unique to AFL - other fuzzers have the same problem. So, similarly to the libpng-nocrc.patch included in the AFL sources, encryption, digests, and base64 encoding are bypassed when fwknop is compiled with --enable-afl-fuzzing on the configure command line. One doesn't need to apply the patch manually - it is built directly into fwknop as of the 2.6.4 release. This is in support of a major goal for the fwknop project, which is comprehensive testing.

If you maintain an open source project that involves crypto and does any sort of encoding/decoding/parsing gated by crypto operations, then you should patch your project so that it natively supports fuzzing with AFL through an optional compile time step. As an example, here is a portion of the patch to fwknop that disables base64 encoding by just copying data manually:

    diff --git a/lib/base64.c b/lib/base64.c
    index b8423c2..5b51121 100644
    --- a/lib/base64.c
    +++ b/lib/base64.c
    @@ -36,6 +36,7 @@
     int
     b64_decode(const char *in, unsigned char *out)
     {
    -    int i, v;
    +    int i;
         unsigned char *dst = out;
    -
    +#if ! AFL_FUZZING
    +    int v;
    +#endif
    +
    +#if AFL_FUZZING
    +    /* short circuit base64 decoding in AFL fuzzing mode - just copy
    +     * data as-is.
    +    */
    +    for (i = 0; in[i]; i++)
    +        *dst++ = in[i];
    +#else
         v = 0;
         for (i = 0; in[i] && in[i] != '='; i++) {
             unsigned int index= in[i]-43;
    @@ -68,6 +80,7 @@ b64_decode(const char *in, unsigned char *out)
         if (i & 3)
             *dst++ = v >> (6 - 2 * (i & 3));
         }
    +#endif
         *dst = '\0';

Fortunately, so far all fuzzing runs with AFL have turned up zero issues (crashes or hangs) with fwknop, but the testing continues.
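
The same compile-time guard can be applied to other crypto gates, such as a digest check. The following is only a minimal sketch of that pattern and is not taken from the fwknop sources: the names `toy_digest` and `digest_matches` and the toy hash are hypothetical, and the only thing borrowed from the patch above is the `AFL_FUZZING` macro, which is assumed here to be set to 1 by the build system in fuzzing builds.

```c
#include <stddef.h>

/* Assumption: the build system defines AFL_FUZZING to 1 for fuzzing
 * builds; default to 0 so a normal build keeps the real check. */
#ifndef AFL_FUZZING
#define AFL_FUZZING 0
#endif

/* Toy stand-in for a real digest such as SHA-256 (hypothetical). */
unsigned long toy_digest(const char *data, size_t len)
{
    unsigned long h = 5381;
    size_t i;

    for (i = 0; i < len; i++)
        h = h * 33 + (unsigned char)data[i];
    return h;
}

/* Returns 1 if the payload should be accepted, 0 otherwise. */
int digest_matches(const char *data, size_t len, unsigned long expected)
{
#if AFL_FUZZING
    /* Fuzzing build: skip the comparison so that mutated inputs still
     * reach the parsing code that sits behind this check. */
    (void)data;
    (void)len;
    (void)expected;
    return 1;
#else
    return toy_digest(data, len) == expected;
#endif
}
```

In a normal build a payload with the wrong digest is rejected, while a build compiled with `-DAFL_FUZZING=1` accepts it, so the fuzzer is not stopped at the gate. Such a build must never be deployed, since it disables the very check it guards.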

Within fwknop, a series of wrapper scripts is used to fuzz the following four areas with AFL. These areas represent the major places within fwknop where data is consumed, either from a configuration file or over the network in the form of an SPA packet:

- SPA packet encoding/decoding: spa-pkts.sh

- Server access.conf parsing: server-access.sh

- Server fwknopd.conf parsing: server-conf.sh

- Client fwknoprc file parsing: client-rc.sh

    $ cd fwknop.git/test/afl/
    $ ./compile/afl-compile.sh
    $ ./fuzzing-wrappers/spa-pkts.sh
    + ./fuzzing-wrappers/helpers/fwknopd-stdin-test.sh
    + SPA_PKT=1716411011200157:root:1397329899:2.0.1:1:127.0.0.2,tcp/22:AAAAA
    + LD_LIBRARY_PATH=../../lib/.libs ../../server/.libs/fwknopd -c ../conf/default_fwknopd.conf -a ../conf/default_access.conf -A -f -t
    Warning: REQUIRE_SOURCE_ADDRESS not enabled for access stanza source: 'ANY'
    + echo -n 1716411011200157:root:1397329899:2.0.1:1:127.0.0.2,tcp/22:AAAAA
    SPA Field Values:
    =================
    Random Value: 1716411011200157
    Username: root
    Timestamp: 1397329899
    FKO Version: 2.0.1
    Message Type: 1 (Access msg)
    Message String: 127.0.0.2,tcp/22
    Nat Access: <NULL>
    Server Auth: <NULL>
    Client Timeout: 0
    Digest Type: 3 (SHA256)
    HMAC Type: 0 (None)
    Encryption Type: 1 (Rijndael)
    Encryption Mode: 2 (CBC)
    Encoded Data: 1716411011200157:root:1397329899:2.0.1:1:127.0.0.2,tcp/22
    SPA Data Digest: AAAAA
    HMAC: <NULL>
    Final SPA Data: 200157:root:1397329899:2.0.1:1:127.0.0.2,tcp/22:AAAAA
    SPA packet decode: Success
    + exit 0
    + LD_LIBRARY_PATH=../../lib/.libs afl-fuzz -T fwknopd,SPA,encode/decode,00ffe19 -t 1000 -i test-cases/spa-pkts -o fuzzing-output/spa-pkts.out ../../server/.libs/fwknopd -c ../conf/default_fwknopd.conf -a ../conf/default_access.conf -A -f -t
    afl-fuzz 0.66b (Nov 23 2014 22:29:48) by <lcamtuf@google.com>
    [+] You have 1 CPU cores and 2 runnable tasks (utilization: 200%).
    [*] Checking core_pattern...
    [*] Setting up output directories...
    [+] Output directory exists but deemed OK to reuse.
    [*] Deleting old session data...
    [+] Output dir cleanup successful.
    [*] Scanning 'test-cases/spa-pkts'...
    [*] Creating hard links for all input files...
    [*] Validating target binary...
    [*] Attempting dry run with 'id:000000,orig:spa.start'...
    [*] Spinning up the fork server...
    [+] All right - fork server is up.

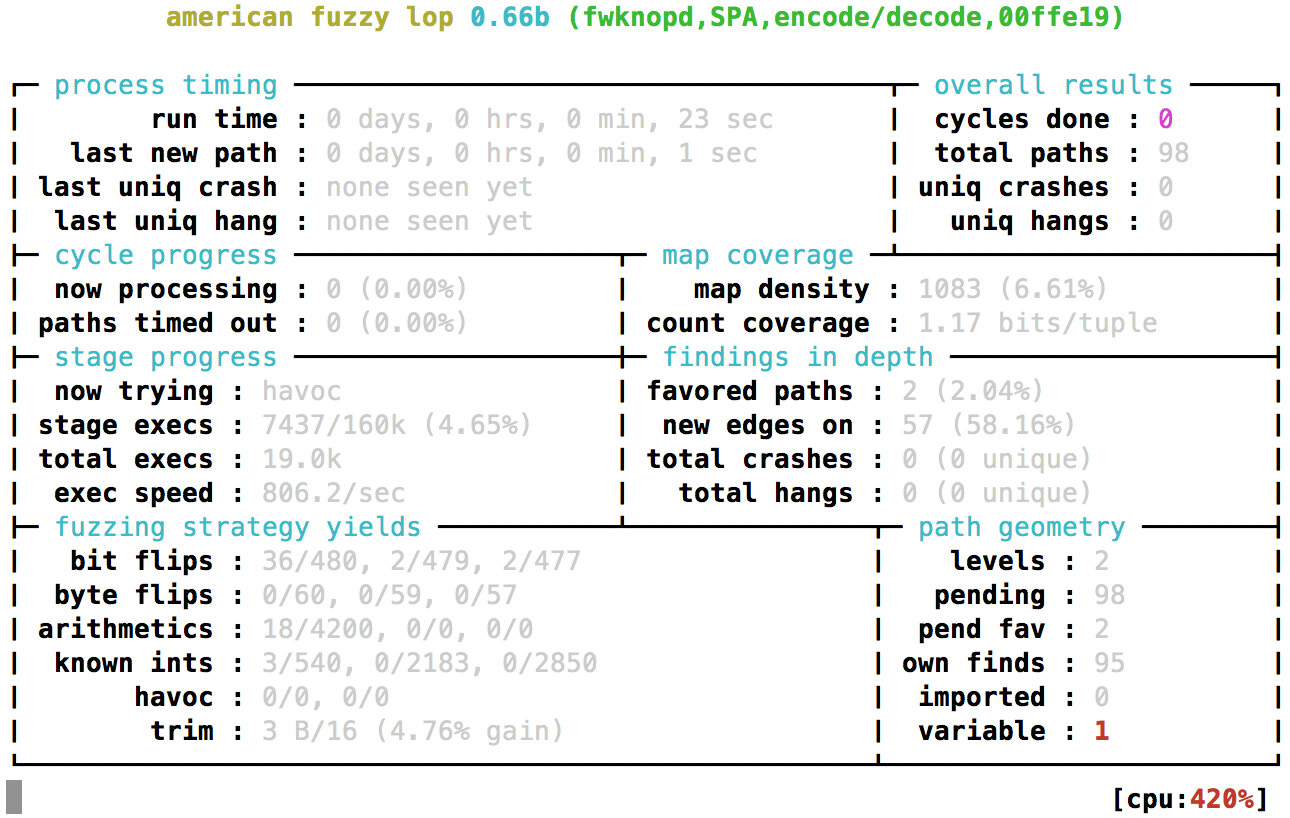

And now AFL is up and running (note the git --abbrev-commit tag integrated

into the text banner to make clear which code is being fuzzed):

If the fuzzing run is stopped by hitting Ctrl-C, it can always be resumed as follows:

    $ ./fuzzing-wrappers/spa-pkts.sh resume

Although major areas in fwknop where data is consumed are effectively fuzzed with AFL, there is always room for improvement. With the wrapper scripts in place, it is easy to add new support for fuzzing other functionality in fwknop. For example, the digest cache file (used by fwknopd to track SHA-256 digests of previously seen SPA packets) is a good candidate.

UPDATE (11/30/2014): The suggestion above about fuzzing the digest cache file proved to be fruitful, and AFL discovered a bounds checking bug that has been fixed in this commit. The fix will ship in the next release of fwknop (2.6.5), which will be made soon.

Taking things to the next level, another powerful technique that would make an interesting side project would be to build a syntax-aware version of AFL that handles SPA packets and/or configuration files natively. The AFL documentation hints at this type of modification, and states that this could be done by altering the fuzz_one() routine in afl-fuzz.c. There is already a fuzzer written in Python for the fwknop project that is aware of the SPA data format (see: spa_fuzzing.py), but the mutators within AFL are undoubtedly much better than those in spa_fuzzing.py. Hence, modifying AFL directly would be an effective strategy.
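
To give a rough idea of what "syntax-aware" means here, the sketch below rewrites a single colon-separated field of an SPA packet while leaving the remaining fields, and therefore the overall packet structure, intact. It is an illustration only: `spa_replace_field` is a hypothetical helper that exists in neither AFL nor fwknop, and the field layout is assumed from the decoded packet shown in the fuzzing output earlier in this post.

```c
#include <stddef.h>
#include <string.h>

/* Replace field number `field` (0-based, ':'-separated) of `pkt` with
 * `repl` and write the result to `out`. Returns 0 on success, or -1 if
 * the field does not exist or the result does not fit in `out`.
 * Hypothetical helper for illustration only. */
int spa_replace_field(const char *pkt, int field, const char *repl,
                      char *out, size_t out_len)
{
    const char *start = pkt;
    const char *end;
    size_t prefix, suffix, repl_len;
    int i;

    if (field < 0)
        return -1;

    /* Walk forward to the first byte of the requested field. */
    for (i = 0; i < field; i++) {
        start = strchr(start, ':');
        if (start == NULL)
            return -1;
        start++; /* step past the delimiter */
    }

    /* The field ends at the next delimiter or at the end of the string. */
    end = strchr(start, ':');
    if (end == NULL)
        end = start + strlen(start);

    prefix = (size_t)(start - pkt);
    suffix = strlen(end);
    repl_len = strlen(repl);
    if (prefix + repl_len + suffix + 1 > out_len)
        return -1;

    memcpy(out, pkt, prefix);
    memcpy(out + prefix, repl, repl_len);
    memcpy(out + prefix + repl_len, end, suffix + 1); /* copies the '\0' too */
    return 0;
}
```

A mutator built along these lines could, for example, swap the username field (field 1) for an overly long string while the timestamp, version, and message fields stay well formed, so the mutated packet gets past the early structural checks. Wiring such a step into fuzz_one() is left open here, since that depends on AFL internals not covered in this post.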

Please feel free to open an issue against fwknop on GitHub if you have a suggestion for enhanced integration with AFL. Most importantly, if you find a bug please let me know. Happy fuzzing!